I recently spoke at the Weapons of Mass Distribution conference hosted by 500 Startups. You can watch the video and read the slides and notes below.

Don’t Become a Victim of One Key Metric

The One Key Metric, North Star Metric, or One Metric That Matters has become standard operating procedure in startups as a way to manage a growing business. The idea is to pick the metric that correlates most with success, and to make sure it is an activity metric, not a vanity metric. In principle, this solves a lot of problems. It gets people chasing problems that affect user engagement instead of top-line metrics that merely look nice for the business. I have seen it abused multiple times, though, and I’ll point to a few examples of how it can go wrong.

Let’s start with Pinterest. Pinterest is a complicated ecosystem. It involves content creators (the people who make the content we link to), content curators (the people who bring that content into Pinterest), and content consumers (the people who view and save that content). Similar to a marketplace, all of these groups have to work in concert to create a strong product. If no new content comes in, there are fewer new things to save or consume, leading to a less engaging experience. Pinterest has tried various times over the years to optimize this complex ecosystem using one key metric. At first, it was MAUs. Then it became clear that the company could optimize for marginal usage instead of for very engaged users. So, the company thought about what metric really showed that a person got value out of what Pinterest showed them. This led to the creation of the WARC, a weekly active repinner or clicker. A repin is a save of content already on Pinterest. A click is a clickthrough from Pinterest to the source of the content. Both indicate Pinterest showed you something interesting. Requiring a weekly action made it impossible to optimize for marginal activity.

There are two issues at play here. The first is the combination of two actions: a repin and a click. This creates what our head of product calls false rigor. You can run an experiment that increases WARCs while actually trading off repins for clicks, or vice versa, and not even realize it because the combined metric went up. Take that to the extreme, and the algorithm optimizes for clickbait images instead of genuinely interesting content, while the metrics make it appear that engagement is increasing. It might be, but it is an empty-calorie form of engagement that will hurt engagement badly over the long term.
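To make the false-rigor problem concrete, here is a minimal Python sketch with made-up numbers (not Pinterest data). It assumes a hypothetical per-user weekly record of repins and clicks, and counts a user toward the WARC-style metric if they did either at least once that week. The treatment arm grows the combined count while losing repinners.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeeklyActivity:
    """One user's activity in one week (hypothetical schema)."""
    repins: int
    clicks: int


def weekly_metrics(users: list[WeeklyActivity]) -> dict[str, int]:
    """The combined WARC-style count plus the two component counts it hides."""
    return {
        "warc": sum(1 for u in users if u.repins > 0 or u.clicks > 0),
        "repinners": sum(1 for u in users if u.repins > 0),
        "clickers": sum(1 for u in users if u.clicks > 0),
    }


# Made-up arms: the treatment quietly swaps repinners for clickers.
control = [WeeklyActivity(3, 0)] * 50 + [WeeklyActivity(0, 2)] * 30 + [WeeklyActivity(0, 0)] * 20
treatment = [WeeklyActivity(2, 0)] * 35 + [WeeklyActivity(0, 4)] * 55 + [WeeklyActivity(0, 0)] * 10

if __name__ == "__main__":
    c, t = weekly_metrics(control), weekly_metrics(treatment)
    for name in c:
        print(f"{name}: {c[name]} -> {t[name]} ({t[name] - c[name]:+d})")
```

Reporting the component counts next to the combined one is what exposes the trade; the combined number alone reads as a clean win.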

The second issue is that it ignores the supply side of the network entirely. No team wants to spend time increasing unique content or surfacing new content more often when there is tried-and-true content that we know drives clicks and repins. This leads to recycled, stale content on a service that wants to provide new ideas. Pinterest no longer uses WARCs as its one key metric, but searching for one key metric at all for a complex ecosystem like Pinterest oversimplifies how the ecosystem works and keeps anyone from focusing on understanding its different elements. You want the opposite to be true: everyone focused on understanding how the different elements of this ecosystem work together. The one key metric can make you think that is not important.

Another example is Grubhub vs. Seamless. These were very similar businesses with different key metrics. Grubhub never subscribed entirely to the one key metric philosophy, so we always looked at quite a few metrics to analyze the health of the business. But if we were forced to boil it down to one, it would be revenue. Seamless used gross merchandise volume. On the surface, these two appear to be the same. If you break the metrics down though, you’ll notice one difference, and it had a profound impact on how the businesses ran.

One way to think of it is that revenue is a subset of GMV, and therefore GMV is a better metric to focus on. Another way to think of it is the reverse: revenue equals GMV multiplied by the marketplace’s commission rate, so revenue captures everything GMV does plus one more lever. So, what did the companies do differently because of this? Seamless optimized for orders and order size, as those increased GMV. Grubhub optimized for orders, order size, and average commission rate. So, while Seamless showed restaurants in alphabetical order in its search results, Grubhub sorted restaurants by the average commission we made from their orders. Later on, Grubhub had the opportunity to test ranking by a restaurant’s average commission combined with its conversion rate, to maximize both the likelihood that an order would happen and the commission the business earned from it. When Grubhub and Seamless became one company, this was one of the first changes made to the Seamless model, as it would drastically increase revenue for the business even though it didn’t affect GMV.
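A minimal sketch of the difference, using hypothetical restaurants and invented numbers (the field names and values are assumptions, not Grubhub or Seamless data). It applies revenue = GMV x commission rate per search visit and compares four orderings: alphabetical, by commission rate, by expected GMV, and by expected revenue (conversion rate x average order value x commission rate), the last being one way to combine commission with conversion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Restaurant:
    name: str
    commission_rate: float  # share of order value the marketplace keeps
    conversion_rate: float  # share of search visitors who end up ordering
    avg_order_value: float  # dollars per order

    def expected_gmv_per_visit(self) -> float:
        return self.conversion_rate * self.avg_order_value

    def expected_revenue_per_visit(self) -> float:
        # Revenue = GMV x commission rate
        return self.expected_gmv_per_visit() * self.commission_rate


def names(ranked: list[Restaurant]) -> list[str]:
    return [r.name for r in ranked]


# Invented example data for illustration only.
restaurants = [
    Restaurant("Alpha Pizza", commission_rate=0.10, conversion_rate=0.20, avg_order_value=25.0),
    Restaurant("Bella Sushi", commission_rate=0.20, conversion_rate=0.08, avg_order_value=40.0),
    Restaurant("Curry House", commission_rate=0.15, conversion_rate=0.15, avg_order_value=30.0),
]

alphabetical = sorted(restaurants, key=lambda r: r.name)
by_commission = sorted(restaurants, key=lambda r: r.commission_rate, reverse=True)
by_expected_gmv = sorted(restaurants, key=Restaurant.expected_gmv_per_visit, reverse=True)
by_expected_revenue = sorted(restaurants, key=Restaurant.expected_revenue_per_visit, reverse=True)

if __name__ == "__main__":
    for label, ranking in [
        ("alphabetical", alphabetical),
        ("commission rate", by_commission),
        ("expected GMV", by_expected_gmv),
        ("expected revenue", by_expected_revenue),
    ]:
        print(f"{label:>16}: {names(ranking)}")
```

With these made-up numbers, the GMV ordering and the revenue ordering disagree, which is the gap the post describes: a GMV-focused team has no reason to rank Curry House above Alpha Pizza.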

This is not to say that revenue is a great one key metric. It may be better than GMV, but it’s not a good one. Homejoy, a service for booking home cleaners, optimized for revenue. Its team found it was easier to grow revenue by driving first-time use instead of repeat engagement. As a result, its retention rates were terrible, and it eventually shut down.

Startups are complicated businesses. Fooling anyone at the company into believing that only one metric matters oversimplifies what is important to work on, and can create tradeoffs that companies don’t realize they are making. Figure out the portfolio of metrics that matter for a business and track them all religiously. You will always have to make tradeoffs between metrics in business, but those tradeoffs should be made explicitly, and they should not hide opportunities.

Currently listening to A Mineral Love by Bibio.

The Right Way To Set Goals for Growth

Many people know growth teams experiment with their product to drive growth. But how should growth teams set goals? At Pinterest, we’ve experimented with how we set goals too. I’ll walk you through where we started, some lessons along the way, and the way we try to set goals now.

Mistake #1: Seasonality

Flash back to early 2014. I started product managing the SEO team at Pinterest. The goal we set was a 30% improvement in SEO traffic. Two weeks in, we hit the goal. Time to celebrate, right? No. The team hadn’t done anything. We saw a huge seasonal lift that raised traffic without any work from the team. Teams should take credit for what they do, not for what happens naturally. What happens when seasonality drops traffic 20%? Does the team get blamed for that? (We did, the first time.) So, we built an SEO experiment framework to actually track our contribution.
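The post doesn’t describe how Pinterest’s SEO experiment framework was built. The minimal sketch below shows one common approach under stated assumptions: pages are split deterministically into hypothetical control and treatment groups by hashing a page ID, only treatment pages get the change, and seasonality is removed by dividing treatment growth by control growth over the same window. The numbers are invented.

```python
import hashlib


def assign_group(page_id: str) -> str:
    """Deterministically bucket a page into a hypothetical 50/50 split."""
    first_byte = hashlib.sha256(page_id.encode("utf-8")).digest()[0]
    return "treatment" if first_byte % 2 else "control"


def seasonality_adjusted_lift(
    control_before: float,
    control_after: float,
    treatment_before: float,
    treatment_after: float,
) -> float:
    """Lift attributable to the change, net of seasonality shared by both groups."""
    control_growth = control_after / control_before  # seasonality alone
    treatment_growth = treatment_after / treatment_before  # seasonality plus the change
    return treatment_growth / control_growth - 1


if __name__ == "__main__":
    # Invented numbers: a +30% seasonal lift hits both groups,
    # and the change adds another 10% on treatment pages.
    naive = 1430 / 1000 - 1
    adjusted = seasonality_adjusted_lift(1000, 1300, 1000, 1430)
    print(f"naive growth: {naive:.0%}, seasonality-adjusted lift: {adjusted:.0%}")
    print(assign_group("/pin/12345/"))
```

The same calculation works in the other direction: if seasonality drops both groups 20% and treatment pages drop only 12%, the adjusted lift is still +10%, so the team isn’t blamed for the season.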

Mistake #2: Only Using Experiment Data

So, we then started setting goals based entirely on experiment results. Our key result would look something like “Increase traffic 20% in Germany Q/Q non-seasonally as measured by experiments,” with a raw number representing what 20% meant. Let’s say it was 100K. Around this time, though, we also started investing a lot in infrastructure as a growth team. For example, we created local landing pages from scratch, and we had our local teams fix a lot of linking issues. You can’t run an experiment on new pages, because the control is zero. So, at the end of the quarter, we looked at our experiments and saw a lift of only around 60K. When we looked at our German site, though, traffic was up 300%. The new landing pages had started accruing a lot of local traffic not accounted for in our experiment data.

Mistake #3: Not Factoring In Mix Shift

In that same quarter, we beat our traffic and conversion goals, but came up short on the company goal for signups. Why did that happen? One of the major factors was mix shift. What does mix shift mean? If your traffic growth shifts toward a lower-converting country (Germany) and away from a higher-converting country (U.S.), you will hit traffic goals, but not signup goals. Also, if the mix of page types you drive traffic to and convert on shifts (Pin pages convert worse than boards, for example), or if the mix of platforms shifts (say you start driving more mobile traffic, which converts lower but has higher activation), you will miss goals.
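Here is a small sketch of the mix shift arithmetic with invented numbers (not Pinterest data). Each country’s conversion rate stays fixed, traffic beats its goal, and signups still miss, because the signup goal assumed last quarter’s blended conversion rate.

```python
def total_signups(traffic: dict[str, int], conversion: dict[str, float]) -> float:
    """Sum of traffic x conversion rate across segments (countries here)."""
    return sum(visits * conversion[segment] for segment, visits in traffic.items())


def blended_rate(traffic: dict[str, int], conversion: dict[str, float]) -> float:
    """Overall conversion rate implied by the traffic mix."""
    return total_signups(traffic, conversion) / sum(traffic.values())


# Invented per-country conversion rates; these do not change between quarters.
conversion = {"US": 0.04, "DE": 0.01}

last_quarter = {"US": 1_000_000, "DE": 250_000}
this_quarter = {"US": 1_000_000, "DE": 600_000}  # all growth came from Germany

# Goals set as +20% traffic at last quarter's blended conversion rate.
traffic_goal = 1.2 * sum(last_quarter.values())
signup_goal = traffic_goal * blended_rate(last_quarter, conversion)

if __name__ == "__main__":
    print(f"traffic: {sum(this_quarter.values()):,} vs goal {traffic_goal:,.0f}")
    print(f"signups: {total_signups(this_quarter, conversion):,.0f} vs goal {signup_goal:,.0f}")
```

Setting signup goals per segment, or re-forecasting the blended rate from the expected traffic mix, is one way to keep the two goals consistent.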

Mistake #4: Setting Percent Change Goals

On our Activation team, we set goals that looked like “10% improvement in activation rate.” That sounds like a lofty goal, right? Well, let’s do the math. Let’s say you have an activation rate of 20%. What most people read when they see a 10% improvement is “oh, you’re going to move it to 30%.” But that’s not what it means. It’s a relative percent change, so the goal is a 2 percentage point improvement, from 20% to 22%. With this tactic, you can set goals that look impressive but don’t actually move the business forward.
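A tiny sketch of the arithmetic using the post’s own example (a 20% baseline and a 10% relative improvement); the function name is just for illustration.

```python
def apply_relative_change(rate: float, relative_change: float) -> float:
    """A relative change scales the current rate; it does not add percentage points."""
    return rate * (1 + relative_change)


baseline = 0.20
target = apply_relative_change(baseline, 0.10)
point_change = (target - baseline) * 100  # in percentage points

if __name__ == "__main__":
    print(f"target rate: {target:.0%}")  # 22%, not 30%
    print(f"change: {point_change:.1f} percentage points")  # 2.0
```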

Mistake #5: Goaling on Rates

Speaking again about that activation goal, there are actually two issues. The last section covered the first one, the percent change. The rate itself is also an issue. An activation rate is two numbers: activated users / total users. There are two ways to move that metric in either direction: change activated users or change total users. When you goal on rates, you get an activation team that wants fewer users so it can hit its rate goal. So, if the traffic or conversion teams identify ways to bring in more users at slightly lower activation rates, the activation team misses its goal, even though the business gains activated users.
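A minimal sketch with invented numbers showing how a rate goal and an absolute goal can disagree: a hypothetical new channel brings in users who activate at 15% instead of 20%. The activation rate falls while the number of activated users rises.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cohort:
    users: int
    activated: int

    @property
    def activation_rate(self) -> float:
        return self.activated / self.users


def combine(*cohorts: Cohort) -> Cohort:
    """Pool cohorts: sum users and activated users, then recompute the rate."""
    return Cohort(
        users=sum(c.users for c in cohorts),
        activated=sum(c.activated for c in cohorts),
    )


# Invented numbers: the existing funnel, plus a new channel at a lower activation rate.
existing = Cohort(users=100_000, activated=20_000)  # 20% activation
new_channel = Cohort(users=50_000, activated=7_500)  # 15% activation
after = combine(existing, new_channel)

if __name__ == "__main__":
    print(f"activation rate: {existing.activation_rate:.1%} -> {after.activation_rate:.1%}")
    print(f"activated users: {existing.activated:,} -> {after.activated:,}")
```

A team goaled on the rate would resist the new channel; a team goaled on activated users would welcome it.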

Best Approach: Set Absolute Goals

What you really care about for a business like Pinterest is increasing the total number of activated users. At real scale, you also care about decreasing churned users, because for many businesses re-acquiring churned users is harder than acquiring someone for the first time. So, those should be the goals: growth in active users and a decrease in churned users. Absolute numbers are what matter in growth. What we do now at Pinterest is set absolute goals, and we make sure we account for seasonality, mix shift, experiment data, and infrastructure work in hitting those goals.

Currently listening to RY30 Trax by µ-ziq.

When Experimenting, Know If You’re Optimizing or Diverging

When I first joined the growth team at Pinterest, a fellow PM presented a new onboarding experience for iOS in one of the early syncs. The experience was well reasoned and an obvious improvement over how we were currently educating new users on getting value out of the product. After the presentation, someone on the team asked how the experiment for it was going to work. At this point, the meeting devolved into a debate about how to break all these new experiences into chunks and what each one needed to measure. It was like watching a car wreck take place. I knew something had gone seriously wrong, but I didn’t know how to change it. The team had an obviously superior product experience, but couldn’t get to a point where it was ready to put it in front of users, because it lacked an experimentation plan that could measure what caused the improvement in performance.

After I took over a new team in growth, I was asked to present a product strategy for it to the CEO and the heads of product, eng, and design. Instead of writing about the product strategy, I wrote an operational strategy first. It was just as important for the team and this group of executives to know how we operated as it was to know what we planned to do. One component of the operational plan was a mix of optimization and divergent thinking. Growth frequently suffers from a local maximum problem: it finds a playbook that works and optimizes it until it yields smaller and smaller marginal gains, making the team less effective and hiding new opportunities not based on the original tactic. Playbooks are great, and when you have one, you should optimize every component of it for maximum success. But optimization shouldn’t blind teams to new opportunities. In the operational strategy, the first section was about questioning assumptions. If an experiment direction showed three straight results with smaller and smaller improvements, the team had to trigger a project in the same area with a totally divergent approach.
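The three-strikes rule can be written down as a simple check. This is one reading of it, assuming each experiment’s result is recorded as a relative lift and that “smaller and smaller improvements” means each of the last three positive lifts is smaller than the one before; the function name and default streak length are hypothetical.

```python
def should_trigger_divergent_project(lifts: list[float], streak: int = 3) -> bool:
    """True if the last `streak` experiment lifts are all positive and strictly shrinking."""
    if len(lifts) < streak:
        return False
    recent = lifts[-streak:]
    all_improvements = all(lift > 0 for lift in recent)
    shrinking = all(later < earlier for earlier, later in zip(recent, recent[1:]))
    return all_improvements and shrinking


if __name__ == "__main__":
    history = [0.12, 0.08, 0.05, 0.02]  # invented relative lifts from successive experiments
    print(should_trigger_divergent_project(history))
```

A negative result breaks the streak here on the assumption that a loss is a different signal from diminishing returns; a team could reasonably choose otherwise.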

This is where that earlier growth meeting ran into trouble. When tackling a divergent approach as an experiment, you will start in one of two places. In one, the team steps back, comes up with a much better solution than what it was optimizing before, and is pretty confident it will beat the control. In the other, the team comes up with a few different approaches and is not sure which, if any, will work. In both cases, it’s impossible to change just one variable from the control to isolate the impact of each change in the experience.

In this scenario, the right solution is not to isolate tons of variables in the new experience vs. the control group. It is to ship an MVP of the totally new experience vs. the control. You do a little bit more development work, but get much closer to validation or invalidation of the new approach. Then, if that new approach is successful, you can look at your data to see which interactions might be driving the lift, and take away components to isolate the variables after you have a winning holistic design.

If you’re optimizing an existing direction, isolating variables is key to learning what impacts your goals. When going in new directions though, isolating variables sets back learning time and may actually prevent you from finding the winning experience. So, when performing experiments, designate whether you are in optimization mode or divergent mode, and pick the appropriate experimentation plan for each.

Currently listening to Oh No by Jessy Lanza.

The Present and Future of Growth

Quite a few people ask me about the future of growth. The idea of having a team dedicated to growing usage of a product is still a fairly new construct for organizations. More junior folks, or people less involved with growth, always ask about the split between marketing and growth. More senior folks always ask about the split between growth and core product. Growth butts heads with both sides.

Why do more senior folks tend to focus on the split between core product and growth rather than marketing? For this I’ll take a step back. I’m a marketer by trade. I have an undergraduate degree in marketing and an MBA with a concentration in marketing. So I consider everything marketing: product, growth, research, and I’ve written about that. I used to see what was happening in tech as marketing’s death by a thousand cuts. Now I see it more as marketing’s definition having gotten so broad, and each individual component so complicated, that it can by no means be managed by one group in a company.

So if marketing is being split into different, more focused functions, growth teams aren’t really butting heads with the remaining functions that are still called marketing over responsibilities like branding. They are butting heads with the core product team over the allocation of resources and real estate for the product.

So how do growth teams and core product teams split those work streams today, and what does the future look like? The best definition I can give of that split for most companies today is that growth teams focus on getting the maximum number of users to experience the current value of the product, or on removing the friction that prevents people from experiencing that value, and core product teams focus on increasing the value of the product. So, when products are just forming, there is no growth team, because the product is just beginning to try to create value for users. During the growth phase, introducing more people to the current value of the product becomes more important and runs in parallel with improving the value of the core product. For late-stage companies, core product teams need to introduce totally new value into the product so that growth doesn’t saturate.

My hope is that in the future, this tradeoff between connecting people to current value, improving current value, and creating totally new value is managed deftly by one product team. That team can either have product people naturally managing the tradeoffs between these three pillars, or three separate teams that ebb and flow in size depending on the strategic priorities of the organization. All three of these initiatives (connecting people to current value, improving current value, and creating new value) are important to creating a successful company, but at different stages of a company, one or two tend to be more important than the others.

We should evolve into product organizations that naturally detect which of these three functions adds the most value at a particular point in time, fund them appropriately, and socialize the reasons for that across the organization so these functions don’t butt heads in the future. I believe that is the product team of the future, and I now think it is more likely than marketers evolving to manage branding, research, performance marketing, and product effectively under one organization.

Currently listening to Good Luck And Do Your Best by Gold Panda.

If It Ain’t Fixed, Don’t Break It

Frequently, products achieve popularity out of nowhere. People don’t realize why or how a product got so popular, but it did. Now, much of the time, this is from years of hard work no one ever saw. As our co-founder at GrubHub put it, “we were an overnight success seven years in the making.” But sometimes, it really just does happen without people, inside or outside the company, knowing why. Especially with social products, sometimes things just take off. When you’re in one of these situations, you can do a couple of things to your product: not change it until you understand why it’s successful now, or try to harness what you understand into something better that fits your vision. This second approach can be a killer for startups, and I’ve seen it happen multiple times.

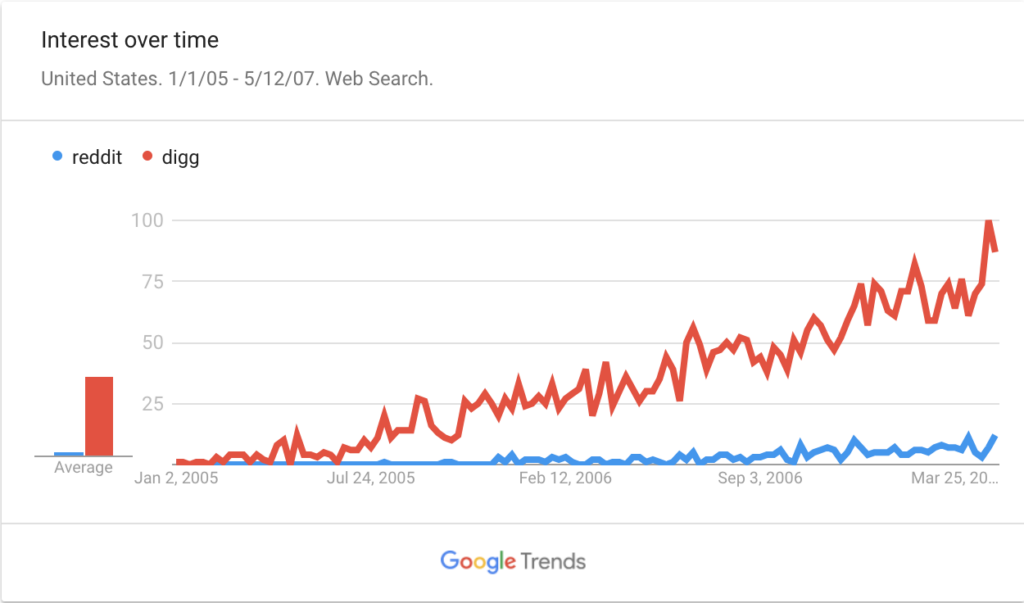

Let’s take two examples in the same space: Reddit and Digg. Both launched within six months of each other with missions to curate the best stories across the internet. Both became popular in sensational but somewhat different ways, though Digg was clearly in breakout mode.

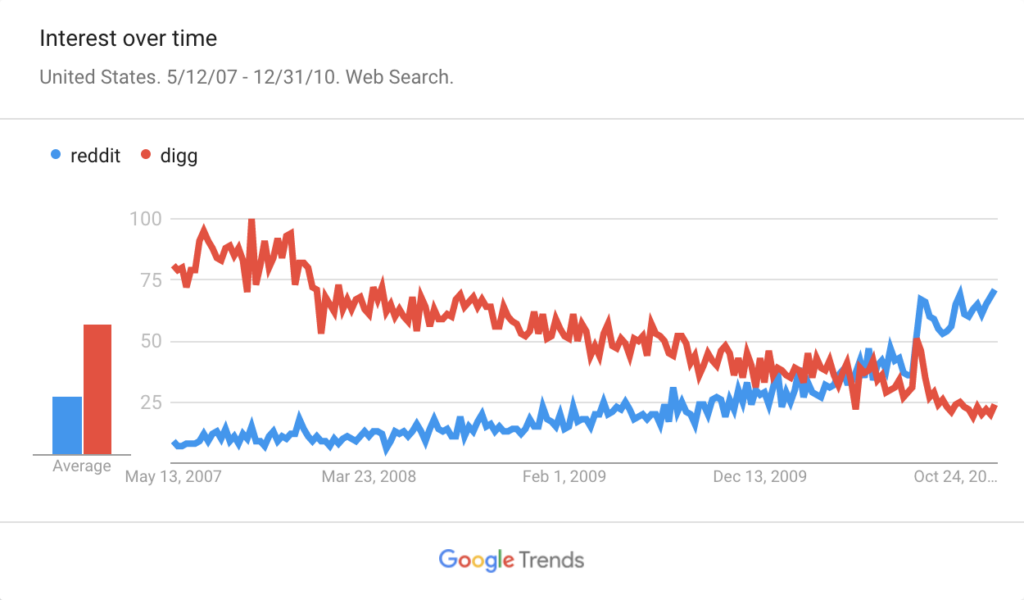

What happened after the end of that graph is a pretty interesting A/B test. Digg kept changing things up, launching redesigns and changing policies. Some of these changes might even have been experiments that showed positive metric increases. Reddit kept the same design and the same features, allowing new “features” to come from the community via subreddits, like AMA. By the launch of Digg’s major redesign in August 2010 (intended to borrow elements from Twitter), Reddit had exploded ahead of Digg.

This is what the long-term result of these two strategies looks like: Digg is a footnote of the internet, and Reddit is now a major force.

Now, neither of these companies represents an ideal scenario. The best option in the situations these companies found themselves in is to deeply understand the value the product provides, and to which customers, and to completely devote the team to increasing and expanding that value over time. But if you can’t figure out exactly why something is working, it is better to do nothing than to start messing with your product in a way that may adversely affect the user experience. This has become one of my unintuitive laws of startups: if it ain’t fixed, don’t break it. If you don’t know why something is working (meaning it’s fixed and not a variable), do nothing but explore why the ecosystem works, and don’t change it until you do. If you can’t figure it out, it’s better to change nothing, like Reddit and Craigslist, than to take a shot in the dark like Digg.

Currently listening to Sisters by Odd Nosdam.

The Mobile Equation

One under-represented area of growth optimization is the mobile web to app handoff and tradeoff. This area of growth is about the key decision of what to do when someone arrives at your website on a mobile device. Do you attempt to get them to sign up or transact on your mobile website? Do you prompt for a mobile app download? Do you make mobile web available at all? Different companies have made different decisions here. What I want to talk about is how to make that decision with data instead of on a “strategic” whim.

To do this, you need to know your company’s mobile equation. The mobile equation is: when I optimize for an app install instead of mobile web usage when someone lands on my mobile website, do I receive more or less engaged users? The way to answer this question is to experiment. Optimize some people towards mobile web usage, and some people toward mobile app download. Cohort these users, and see which group has more engagement over the long term.
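A minimal sketch of reading out that experiment, assuming each arm’s users are cohorted from the moment they land and that “engaged” means still doing the core action at the end of some fixed window (the schema and the numbers are invented). The comparison is engaged users per landing, not signups.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmResult:
    name: str
    landings: int  # mobile web visitors assigned to this arm
    signups: int  # signed up on mobile web or in the app
    engaged: int  # still engaged at the end of the window (assumed definition)

    @property
    def signup_rate(self) -> float:
        return self.signups / self.landings

    @property
    def engaged_per_landing(self) -> float:
        return self.engaged / self.landings


def better_arm(arms: list[ArmResult]) -> ArmResult:
    """The arm that produces more long-term engaged users per landing wins."""
    return max(arms, key=lambda arm: arm.engaged_per_landing)


# Invented results for illustration only.
mobile_web = ArmResult("optimize for mobile web", landings=10_000, signups=800, engaged=160)
app_prompt = ArmResult("optimize for app install", landings=10_000, signups=500, engaged=250)

if __name__ == "__main__":
    for arm in (mobile_web, app_prompt):
        print(f"{arm.name}: signup rate {arm.signup_rate:.1%}, "
              f"engaged per landing {arm.engaged_per_landing:.2%}")
    print("winner:", better_arm([mobile_web, app_prompt]).name)
```

In practice you would also check that the difference is statistically significant, and rerun the comparison as onboarding, prompts, and the apps themselves improve.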

Keep in mind, though, that engagement can also be optimized, and where the baseline ends up is the result of multiple factors:

- the quality of mobile app onboarding

- the quality of mobile website onboarding

- the quality of the mobile website itself

- the quality of the mobile app itself

- the quality of the mobile app prompt

- where you prompt for app download

- the country of the user

- the landing page of the mobile website

At Pinterest, when we ran this baseline experiment, even though prompting for app download decreased signups significantly, we still ended up with more activated users by optimizing for mobile app download, because the activation rate was so much higher. That meant more people got more long-term value from Pinterest in exchange for a little more friction up front. So, we got to work optimizing all of the steps of the mobile app funnel. We tested over 15 different app interstitial concepts. We redesigned the mobile app signup flow multiple times. We made the mobile app faster. We also started preserving the context of what you were looking at on mobile web in what we first showed you in the mobile app. We saw significant increases in engaged use from all of these experiments.

Learn your mobile equation. It will help drive your strategy as well as some key growth opportunities.

Currently listening to Rojus by Leon Vynehall.

This Week in Startups Presentation

I recently spoke at the Launch Incubator, and it was featured on This Week in Startups. You can watch the video below as well as view the slides.

Hiring Startup Executives

I was meeting with a startup founder last week, and he started chatting about some advice he got after his latest round of investment about bringing in a senior management team. He then said he had spent the last year doing that. I stopped him right there and asked, “Are you batting .500?” Only about half of those executives were still at the company, and the company had generally promoted from within to fill those roles after the executives left. The reason I was able to ask about that batting average is that I have seen this happen at many startups before. The new investor asks them to beef up their management team, so the founders recruit talent from bigger companies, and the company experiences, as this founder put it, “organ rejection” way too often.

This advice from investors to scaling companies is very common, but I wish those investors would provide more advice on who is actually a good fit for startup executive roles. Startups are very special animals, and they have different stages. Many founders look for executives at companies they want to emulate someday, but don’t test whether those executives can scale down to a smaller environment. There are many executives who are great for public companies but terrible for startups, and many who are great at one stage of a startup but terrible at others. What founders need to screen for, I might argue above all else, is adaptability and pragmatism.

Why is adaptability important? Because it will be tested every day, starting on day one. The startup will have less process, less infrastructure, and a different way of accomplishing things than the executive is used to. Executives who are poor fits for startups will try to copy and paste the approach from their (usually much bigger) former company without adapting it to stage, talent, or business model. It’s easy for founders to be fooled by this early on because they think, “this is why I hired this person: to bring in best practices.” That is wrong. Great startup executives spend their early days learning how the organization works so they can create new processes and ways of accomplishing things that enhance what the startup is already doing. When we brought on a VP of Marketing at GrubHub, she spent all her time soaking up what was going on and held off on making personnel changes. It turned out she didn’t need to make many to be successful. We were growing faster, and had a new brand and better coverage of our marketing initiatives, after adding only two people and one consultant in the first year.

Why is pragmatism important? As many startups forgot over the last couple of years, startups are on a timer. The timer is the amount of runway you have, and what the startup needs to do is find a sustainable model before that timer gets to zero. Poor startup executives have their own way of doing things, and that usually correlates with needing a very big team. They will want to build it as soon as possible, which accelerates burn and shortens the runway before they have done anything to speed up finding a sustainable model. I remember meeting with a new startup exec and having her run me through her plan for building a team. She was maybe in her second week, and by the end of our conversation I had counted at least 15 hires she needed to make. I thought, “this isn’t going to work.” She lasted about six months. A good startup executive learns before hiring, and tries things before committing to them fully. Once they know something works, they build scale and infrastructure around it. A good startup executive thinks in terms of costs: opportunity costs, capital costs, and payroll. Good executives will trade on opportunity costs and capital costs before payroll, because salaries are generally the most expensive and the hardest to change without serious morale implications (layoffs, salary reductions, et al.).

Startup founders shouldn’t feel like batting .500 is good in executive hiring. Let’s all strive to improve that average by searching for the right people from the start by testing for adaptability and pragmatism. You’ll hire a better team, cause less churn on your team, and be more productive.

Product-Market Fit Requires Arbitrage

One of the most discussed topics for startups is product-market fit. Popularized by Marc Andreessen, product-market fit is defined as:

Product/market fit means being in a good market with a product that can satisfy that market.

Various growth people have attempted to quantify if you have reached product-market fit. Sean Ellis uses a survey model. Brian Balfour uses a cohort model. I prefer Brian’s approach here, but it’s missing an element that’s crucial to growing a business that I want to talk about.

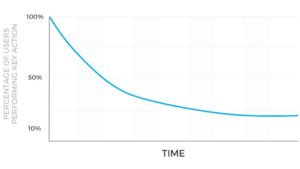

First, let’s talk about what’s key about Brian’s model, a flattened retention curve. This is crucial as it shows a segment of people finding long term value in a product. So, let’s look at what the retention curve shows us. It shows us the usage rates of the aggregation of users during a period of time, say, one month. If you need help building a retention curve, read this. A retention curve that is a candidate for product-market fit looks like this:

The y axis is the percent of users doing the core action of a product. The x axis in this case is months, but it can be any time unit that makes sense for the business. What makes this usage pattern a candidate for product-market fit is that the curve flattens, and does so fairly quickly, i.e., in less than one year. What else do you need to know to determine if you are at product-market fit? How much revenue that curve represents per user, and whether you can acquire more people at a price below that revenue.
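One way to check whether a retention curve has flattened, sketched in Python. The tolerance (a drop of less than one percentage point per month) and the three-month window are assumptions for illustration, not thresholds from the post, and the curves are invented.

```python
from typing import Optional


def flattening_month(curve: list[float], tolerance: float = 0.01, window: int = 3) -> Optional[int]:
    """Return the first month from which retention drops by less than `tolerance`
    (absolute share of the cohort) in each of the next `window` months, else None."""
    for start in range(len(curve) - window):
        drops = [curve[m] - curve[m + 1] for m in range(start, start + window)]
        if all(drop < tolerance for drop in drops):
            return start
    return None


# Share of the cohort doing the core action, by month since signup (invented).
flattening = [1.0, 0.55, 0.40, 0.33, 0.30, 0.295, 0.29, 0.288, 0.287]
decaying = [1.0, 0.80, 0.60, 0.45, 0.30, 0.20, 0.10]

if __name__ == "__main__":
    month = flattening_month(flattening)
    # Second value checks the post's "fairly quickly" condition (under a year).
    print(month, month is not None and month < 12)
    print(flattening_month(decaying))
```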

If you are a revenue-generating business, a cohort analysis can determine a lifetime value. If the core action generates revenue, you can build one cohort view for the number of people who did at least one action, another for actions per active user during the period, and another for average transaction size among those who did the core action. Together, these give you a lifetime value (active users x times active x revenue per transaction).
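The lifetime value calculation can be sketched as a sum over cohort months of active share x transactions per active user x revenue per transaction. The inputs are invented, and revenue per transaction is treated, as an assumption, as the revenue the business keeps per transaction.

```python
def monthly_value_per_user(
    active_share: list[float],
    transactions_per_active: list[float],
    revenue_per_transaction: list[float],
) -> list[float]:
    """Expected revenue per originally acquired user in each month of the cohort's life."""
    if not (len(active_share) == len(transactions_per_active) == len(revenue_per_transaction)):
        raise ValueError("all cohort series must cover the same months")
    return [
        active * transactions * revenue
        for active, transactions, revenue in zip(
            active_share, transactions_per_active, revenue_per_transaction
        )
    ]


def lifetime_value(monthly_values: list[float]) -> float:
    """Lifetime value over whatever horizon the series covers."""
    return sum(monthly_values)


# Invented cohort data, month 1 through month 4.
values = monthly_value_per_user(
    active_share=[1.0, 0.5, 0.4, 0.35],
    transactions_per_active=[2.0, 1.5, 1.5, 1.5],
    revenue_per_transaction=[3.0, 3.0, 3.0, 3.0],
)

if __name__ == "__main__":
    print([round(v, 3) for v in values], round(lifetime_value(values), 3))
```

The horizon the series covers is effectively your definition of “lifetime.”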

Now, an important decision for every startup is how to define lifetime. I prefer to simplify this question to: what is your intended payback period? That is, how long are you willing to wait for the amount spent on a new user to be paid back to the business through that user’s transactions? Obviously, every founder would like that to happen on the first purchase, but that is rarely possible. The best way to answer this question is to use your data to see how far out you can predict, with some accuracy, what users who come in today will do. For startups, this typically is not very far into the future, maybe three months. I typically advise startups to start at three months and increase it to six months over time. Later-stage startups typically move to one year. I would rarely advise a company to have a payback period longer than one year, as you need to start factoring in the time value of money, and predicting that far into the future is very hard for all but the most stable businesses.
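Payback can be checked directly from a monthly value series. A minimal sketch, assuming you already have expected revenue per acquired user by month (invented numbers below) and an acquisition cost per user; the function names are hypothetical.

```python
from typing import Optional


def payback_month(monthly_value: list[float], acquisition_cost: float) -> Optional[int]:
    """First month (1-indexed) in which cumulative revenue per user covers the acquisition cost."""
    cumulative = 0.0
    for month, value in enumerate(monthly_value, start=1):
        cumulative += value
        if cumulative >= acquisition_cost:
            return month
    return None  # not paid back within the data we have


def max_affordable_cost(monthly_value: list[float], payback_months: int) -> float:
    """Highest acquisition cost per user that still pays back within the target window."""
    return sum(monthly_value[:payback_months])


# Invented expected revenue per acquired user, months 1 to 6.
monthly_value = [6.0, 2.25, 1.8, 1.575, 1.5, 1.5]

if __name__ == "__main__":
    for cost in (9.0, 12.0):
        print(f"cost {cost}: pays back in month {payback_month(monthly_value, cost)}")
    print(f"max cost for a 3-month payback: {max_affordable_cost(monthly_value, 3):.2f}")
```

Comparing what you actually pay to acquire a user against `max_affordable_cost` for your chosen window is one way to frame whether you can acquire customers within your payback period.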

So, if you have your retention curve and your payback period, to truly know if you are at product-market fit, you have to ask: can I acquire more customers at a price where I hit my payback period? If you can, you are at product-market fit, which means it’s time to focus on growth and scaling. If you can’t, you are not, and you need to focus on improving your product. You either need to make more money per transaction or increase the number of times users transact.

Some of you might be asking: what if you don’t have a business model yet? Then the answer is simple. Have a retention curve that flattens, and be able to grow customers organically along that same curve. If you can’t do that and need to spend money on advertising to grow, you are not at product-market fit.

Others might also ask: what if you are a marketplace where acquisition can take place on both sides? If you acquire users on both sides at the wrong payback period, you’ll spend more than you’ll ever make. Most marketplaces handle this by paying to acquire one side and using it to attract the other side organically. Another strategy is to treat the supply side as a sunk cost, because there is a finite amount of supply. The last strategy here is to set very conservative payback periods on both the supply and demand sides, so that added together they come nowhere near the aggregate lifetime value for the company.

Currently listening to From Joy by Kyle Hall.